In this age of information overload, the challenge is not accessing data but filtering it. Whether you’re a student or a professional, you’re surrounded by information in the form of emails, reports, articles, social media updates, and more. The sheer volume can be overwhelming, and the real question is what is relevant to you and what is not.

You may not need every detail in a 25-page report on current marketing trends, or in a statistical report on the progress of reengineering work in your society; you just want the essential information. This is where AI summarization comes to your aid.

But have you wondered how AI knows what’s relevant and what’s not? What parameters does AI use to decide which information to give you?

The answer is both simple and complex. AI uses machine learning (ML) algorithms and natural language processing (NLP) to do this. But does it do this on its own? No. AI summarization tools must first be trained on large datasets to ‘learn’ patterns.

Let’s take a deeper dive into the step-by-step summary generation process.

How to Train AI for Text Summarization

- Data Preparation

It all begins with data. Any AI model is only as good as the data it is trained on.

AI summarization models are trained on large datasets of documents paired with their corresponding summaries. Training datasets are chosen based on what the tool will ultimately be used for. Examples include academic research papers with their abstracts, news articles paired with their headlines, or even customer reviews with their star ratings.

The accuracy and effectiveness of the summarization model depend on the quality of the training data. It is therefore important to preprocess that data to remove noise and other inconsistencies, such as garbled text from blurry scans or misaligned text boxes.
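The cleaning step can be sketched in a few lines of Python. This is a minimal illustration that assumes the training set is a list of (document, summary) string pairs. The function names, the sample pairs, and the filtering rules (minimum length, summary shorter than its source, exact-duplicate removal) are hypothetical choices, not a standard recipe. NFKC Unicode normalization is included because it repairs some PDF-extraction debris, such as the “ﬁ” ligature.

```python
import re
import unicodedata

# Hypothetical (document, reference summary) pairs, including some noisy ones.
RAW_PAIRS = [
    ("The council approved the new budget on Monday after a long debate.",
     "Council approves budget."),
    ("The council approved the new budget on Monday after a long debate.",
     "Council approves budget."),  # exact duplicate
    ("Sales  rose\n\n12% in the third   quarter, driven by strong online demand.",
     "Sales rose 12% in Q3."),  # messy whitespace left over from extraction
    ("Too short.", "Short."),  # too little text to learn from
    ("", "Empty document."),  # missing source text
]


def clean_text(text: str) -> str:
    """Apply NFKC normalization (e.g. the 'fi' ligature -> 'fi') and collapse whitespace."""
    text = unicodedata.normalize("NFKC", text)
    return re.sub(r"\s+", " ", text).strip()


def clean_pairs(pairs, min_doc_words=5):
    """Return cleaned pairs, dropping empty, too-short, non-summarizing and duplicate ones."""
    seen = set()
    cleaned = []
    for doc, summary in pairs:
        doc, summary = clean_text(doc), clean_text(summary)
        if not doc or not summary:
            continue  # one side of the pair is missing
        doc_words = len(doc.split())
        if doc_words < min_doc_words or len(summary.split()) >= doc_words:
            continue  # source too short, or the "summary" is not shorter than the source
        if (doc, summary) in seen:
            continue  # exact duplicate of a pair already kept
        seen.add((doc, summary))
        cleaned.append((doc, summary))
    return cleaned
```

With the sample data, `clean_pairs(RAW_PAIRS)` keeps two of the five pairs: the first budget pair and the sales pair with its whitespace repaired.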

- Text Processing

Before the text is used for training, it needs some preparation, which can involve:

- Normalization: This process converts all text to lowercase, reduces words to their root forms (stemming or lemmatization), and removes punctuation (e.g., “Walking” becomes “walking” after lowercasing, and “walk” once reduced to its root).

- Tokenization: The text is broken down into individual words or other meaningful units, such as phrases.

- Stop Word Removal: This is the process of removing common words that add little meaning on their own, such as “the,” “is,” and “a.”
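The three preparation steps can be chained as in the following minimal Python sketch. The stop-word list is a small illustrative assumption, since libraries such as NLTK and spaCy ship much larger ones. Stemming is left out to keep the example dependency-free; a stemmer such as NLTK’s `PorterStemmer` would additionally reduce “walking” to “walk”.

```python
import re
import string

# Tiny illustrative stop-word list (an assumption; real lists are far longer).
STOP_WORDS = {"a", "an", "and", "for", "in", "is", "it", "of", "on", "the", "to"}


def normalize(text: str) -> str:
    """Lowercase the text and strip ASCII punctuation."""
    return text.lower().translate(str.maketrans("", "", string.punctuation))


def tokenize(text: str) -> list[str]:
    """Split normalized text into word tokens (ASCII letters and digits only, for simplicity)."""
    return re.findall(r"[a-z0-9]+", text)


def remove_stop_words(tokens: list[str]) -> list[str]:
    """Drop tokens that carry little meaning on their own."""
    return [t for t in tokens if t not in STOP_WORDS]


def preprocess(text: str) -> list[str]:
    return remove_stop_words(tokenize(normalize(text)))
```

For example, `preprocess("Walking is good for the heart, and it is free!")` returns `['walking', 'good', 'heart', 'free']`.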

- Feature Engineering

AI summarization models don’t pick sentences at random; they analyze the context, frequency, and placement of ideas in a text. This step is called feature engineering, and it can involve:

- Word Frequency: The algorithm measures how often each word appears in the text. Words that recur often tend to signal the main topics.

- Part-of-speech Tags: This involves the identification of nouns, verbs, adjectives etc. in the text.

- Named Entity Recognition: This is the process of identifying all the proper nouns like names of people, places or organizations in the text.

- Sentence Position: This captures how much weight a sentence carries based on where it appears, such as at the beginning or end of a text. For example, an executive summary might be given more importance than the conclusion of a document.
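Here is a rough Python sketch of how some of these features might be computed for each sentence. It is a simplification that rests on several assumptions:

- Sentences are split on end punctuation.
- Position is scored as 1, 1/2, 1/3, and so on, favouring early sentences.
- “Capitalized words that do not start a sentence” serve as a crude stand-in for named entity recognition.

Real part-of-speech tagging and NER need a trained model (for example from spaCy), so they are not reproduced here. The function names are hypothetical.

```python
import re
from collections import Counter


def split_sentences(text: str) -> list[str]:
    """Naive splitter: breaks after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s.strip()]


def sentence_features(text: str) -> list[dict]:
    sentences = split_sentences(text)
    words = [re.findall(r"[a-z]+", s.lower()) for s in sentences]
    freq = Counter(w for sentence_words in words for w in sentence_words)
    features = []
    for i, (sentence, sentence_words) in enumerate(zip(sentences, words)):
        features.append({
            "sentence": sentence,
            # Word frequency: average document-wide count of this sentence's words.
            "word_freq": (sum(freq[w] for w in sentence_words) / len(sentence_words)
                          if sentence_words else 0.0),
            # Sentence position: 1.0 for the first sentence, then 1/2, 1/3, ...
            "position": 1.0 / (i + 1),
            # Crude named-entity proxy: capitalized tokens that do not open the sentence.
            "entities": sum(1 for tok in sentence.split()[1:] if tok[:1].isupper()),
        })
    return features
```

For the text “Acme Corp reported record revenue. Revenue grew in Europe and Asia. The weather was mild.”, the first sentence gets the highest word-frequency score (1.2, because “revenue” appears twice in the document). The second sentence is flagged with two entity-like tokens (“Europe”, “Asia”).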

- Model Selection and Training

AI text summarization typically relies on transformer-based neural networks such as GPT, PEGASUS, and BART, which are adept at modeling the relationships between words in a text. Machine learning engineers choose one of these models and train it to learn the patterns that connect an original text to its corresponding summary. During training, the network compares its output with the reference summary and adjusts its internal weights to reduce the difference. Over many rounds, its summaries gradually become more accurate.

For example, a model trained to summarize news articles learns to prioritize key points that answer who, what, where, and when. A model that summarizes emails, by contrast, would prioritize action items and deadlines.
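The idea of “adjusting internal weights to reduce the difference” can be illustrated at toy scale. The sketch below is not how GPT, PEGASUS, or BART are trained. It swaps in a two-feature logistic regression that learns, by gradient descent, whether a sentence belongs in a summary. The features (position and word frequency), the labelled examples, the learning rate, and the epoch count are all hypothetical. The loop itself (predict, measure the error, nudge the weights) is the same basic mechanism that large networks apply across millions of parameters.

```python
import math

# Hypothetical labelled data: each sentence is described by two features
# (position score, word-frequency score) and labelled 1 if a human kept it
# in the reference summary, 0 otherwise.
TRAINING_DATA = [
    ([1.0, 0.9], 1),
    ([0.8, 0.7], 1),
    ([0.2, 0.1], 0),
    ([0.0, 0.3], 0),
]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, features):
    """Probability that a sentence belongs in the summary."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)


def log_loss(weights, bias, data):
    """Average cross-entropy between predictions and human labels."""
    total = 0.0
    for x, y in data:
        p = predict(weights, bias, x)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)


def train(data, learning_rate=0.5, epochs=5000):
    """Batch gradient descent: repeatedly nudge the weights to reduce the loss."""
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in data:
            error = predict(weights, bias, x) - y  # prediction minus label
            for j, xj in enumerate(x):
                grad_w[j] += error * xj
            grad_b += error
        weights = [w - learning_rate * g / len(data) for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / len(data)
    return weights, bias
```

After `weights, bias = train(TRAINING_DATA)`, the loss is lower than at the all-zero starting point. `predict` then places every training sentence on the correct side of 0.5.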

- Summary Generation

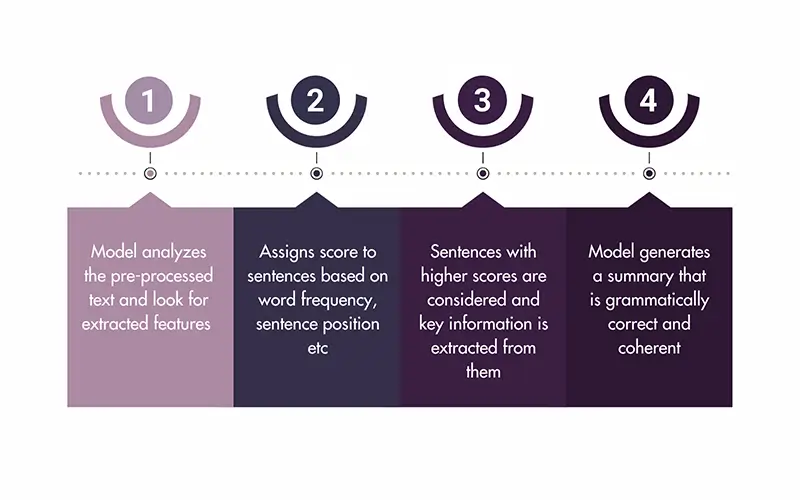

Once trained on large datasets, the models are ready to generate summaries for new, unseen text.

The image above outlines the steps involved in generating a summary.
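As a hedged illustration of the generation step, here is a minimal extractive summarizer in Python. It is not how GPT, PEGASUS, or BART generate text, since those models write new text token by token. Instead, it does the following:

1. Splits the input into sentences.
2. Scores each sentence by the average document-wide frequency of its non-stop words.
3. Returns the top-scoring sentences in their original order.

The stop-word list and the scoring rule are illustrative assumptions, and sentence position is ignored here for brevity.

```python
import re
from collections import Counter

# Illustrative stop-word list; real systems use much larger ones.
STOP_WORDS = {"a", "an", "and", "are", "for", "in", "is", "it", "of",
              "on", "the", "their", "than", "this", "to"}


def content_words(text: str) -> list[str]:
    """Lowercased alphabetic tokens with stop words removed."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS]


def summarize(text: str, num_sentences: int = 2) -> str:
    # 1. Split the input into sentences (naive rule: end punctuation plus whitespace).
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s.strip()]
    if len(sentences) <= num_sentences:
        return " ".join(sentences)

    # 2. Count how often each content word occurs across the whole document.
    freq = Counter(content_words(text))

    # 3. Score each sentence by the average document frequency of its content words.
    def score(sentence: str) -> float:
        words = content_words(sentence)
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    # 4. Rank sentences by score; sorted() is stable, so ties favour earlier sentences.
    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    # 5. Restore original order so the summary reads naturally.
    return " ".join(sentences[i] for i in sorted(ranked[:num_sentences]))
```

Given a four-sentence paragraph about solar power that ends with an unrelated sentence about hiking, `summarize(text, 2)` keeps the two sentences mentioning both “solar” and “panels” and drops the off-topic one.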

- AI Summary Refinement Techniques

Some AI summarization tools incorporate additional steps to refine the generated summary. This could involve:

- Redundancy Removal: This process removes repeated or unnecessary information from the summary.

- Sentence Compression: This involves shortening sentences without changing their meaning or the context of the summary.

- Summary Evaluation: This process uses metrics such as ROUGE, which measures word overlap with human-written reference summaries, to assess the quality of a generated summary.
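ROUGE is a widely used family of metrics for comparing a generated summary with a human-written reference. ROUGE-1, its simplest variant, counts overlapping single words. The Python sketch below is simplified. It lowercases the text and splits only on whitespace, so punctuation stays attached to words. It also omits the stemming and tokenization options offered by full implementations such as Google’s `rouge-score` package.

```python
from collections import Counter


def rouge_1(candidate: str, reference: str) -> dict:
    """Simplified ROUGE-1: unigram precision, recall and F1 against one reference."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # '&' keeps the minimum count per word, so repeated words are not over-credited.
    overlap = sum((cand & ref).values())
    precision = overlap / sum(cand.values()) if cand else 0.0
    recall = overlap / sum(ref.values()) if ref else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}
```

Comparing “the cat sat on the mat” with the reference “the cat is on the mat” gives 5 overlapping words out of 6 on each side. Precision, recall, and F1 are therefore all 5/6.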

Extractive Summarization Vs Abstractive Summarization

There are two main types of AI text summarization, and the choice between them depends on what the summary will be used for.

- Extractive: As the name suggests, this method identifies the main sentences in the original text and stitches them together into a summary. It’s like running a highlighter over the original text. This type of summarization is useful for legal texts, contracts, and other documents where retaining the original wording is crucial.

- Abstractive: This is a more advanced form of summarization in which the AI tool comprehends the meaning and context of the input text and generates a summary in new words. It is commonly used to summarize long literary texts, customer reviews, and other content where there is more room for creativity. Abstractive summaries can be shorter and more informative, but they are harder to generate accurately when factual details must be preserved.
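Because an extractive summary reuses sentences verbatim, its output can be checked against the source. This matters when exact wording is important, as with contracts. The Python helper below, `is_extractive`, is a hypothetical sketch. It assumes a naive end-punctuation sentence splitter and ignores differences in whitespace. An abstractive summary will usually fail this check because it rephrases the source.

```python
import re


def split_sentences(text: str) -> list[str]:
    """Naive splitter: breaks after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s.strip()]


def is_extractive(summary: str, source: str) -> bool:
    """True if every summary sentence appears word for word in the source text."""
    normalized_source = " ".join(source.split())  # collapse whitespace and line breaks
    return all(" ".join(s.split()) in normalized_source for s in split_sentences(summary))
```

For a lease clause such as “Late payments incur a 5% fee.”, an extractive summary that copies the sentence passes the check. A paraphrase like “Rent is due monthly, with a fee for late payment.” does not.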

How AI Decides What to Include: Signals of Relevance

One of the biggest strengths of AI summarization is its ability to detect what truly matters in a sea of information. Models use multiple signals of relevance, including:

- Keyword Detection: Words and phrases that occur frequently or are domain-specific (such as “revenue,” “diagnosis,” or “deadline”) signal importance.

- Contextual Patterns: Using NLP, AI identifies how words relate to one another, giving priority to cause–effect statements, definitions, or facts.

- Entity Recognition: Proper nouns like names of people, organizations, medications, or locations are flagged as vital details.

- Sentence Position: Sentences at the start of a document, in abstracts, or in executive summaries often carry higher weight.

- Semantic Centrality: AI evaluates which sentences are semantically connected to the main theme, ensuring the big picture is retained.

Together, these signals guide the model in highlighting the core meaning of a document while setting aside filler material.
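Semantic centrality can be roughly approximated without a neural model. The Python sketch below assumes that plain word counts stand in for the sentence embeddings a real system would use. Each sentence becomes a bag-of-words vector, and its score is its cosine similarity to the combined vector of the whole document. Sentences that share vocabulary with the rest of the text score higher, while off-topic ones score lower. The function names are hypothetical.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def centrality_scores(sentences: list[str]) -> list[float]:
    vectors = [bag_of_words(s) for s in sentences]
    # Summing the vectors gives the document's overall "theme"; cosine ignores scale,
    # so the sum works as well as an average would.
    centroid = Counter()
    for vector in vectors:
        centroid.update(vector)
    return [cosine(vector, centroid) for vector in vectors]
```

In a short passage about cloud revenue, a closing sentence about the office cafeteria receives the lowest centrality score. A summarizer would therefore be least likely to keep it.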

How AI Decides What to Leave Out: Noise Filtering

Just as important as deciding what to keep is learning what to leave out. AI summarization tools are trained to filter out noise, redundancy, and irrelevant details that do not add value. They do this through:

- Redundancy Detection: If multiple sentences repeat the same idea, AI includes it once and removes the rest.

- Irrelevance Filtering: AI downweights casual remarks, digressions, or anecdotal details that don’t serve the document’s purpose.

- Bias Control: Since training data can contain bias, some models apply balancing techniques to make summaries more objective.

- Discourse Understanding: AI recognizes filler phrases (“as mentioned earlier,” “by the way”) and excludes them from the output.

- Human Feedback Loops: Over time, models are fine-tuned with corrections from human reviewers, so they better understand what users consider noise.

This noise filtering ensures that summaries are not just short, but also meaningful, accurate, and free from distractions.
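Two of these filters, redundancy detection and filler-phrase removal, can be sketched in Python as follows. The sketch rests on these assumptions:

- Redundancy is measured with Jaccard similarity: shared words divided by all distinct words across both sentences.
- Sentences at or above an arbitrary threshold of 0.6 are treated as repeats.
- The filler list contains only the two phrases mentioned above.
- Fillers are removed only when they open a sentence.

Production systems typically compare meaning-level embeddings rather than raw word sets.

```python
import re

# Illustrative filler phrases (taken from the examples above); a real list would be longer.
FILLER_PHRASES = ("as mentioned earlier", "by the way")


def strip_fillers(sentence: str) -> str:
    """Remove a filler phrase that opens the sentence (mid-sentence fillers are ignored)."""
    for phrase in FILLER_PHRASES:
        stripped = re.sub(rf"^\s*{re.escape(phrase)}\s*,?\s*", "", sentence, flags=re.IGNORECASE)
        if stripped != sentence:
            sentence = stripped[:1].upper() + stripped[1:]  # re-capitalize the new first word
    return sentence


def word_set(sentence: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", sentence.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of words two sentences have in common (0.0 = none, 1.0 = identical sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def filter_noise(sentences: list[str], threshold: float = 0.6) -> list[str]:
    """Strip opening fillers, then drop sentences that near-duplicate one already kept."""
    kept, kept_words = [], []
    for sentence in sentences:
        sentence = strip_fillers(sentence)
        if not sentence:
            continue
        words = word_set(sentence)
        if any(jaccard(words, seen) >= threshold for seen in kept_words):
            continue  # redundant: repeats an idea already kept
        kept.append(sentence)
        kept_words.append(words)
    return kept
```

Given the sentences “Revenue grew 10% in 2023.”, “As mentioned earlier, revenue grew 10% in 2023.”, “By the way, the new CEO starts in May.”, and “Costs fell slightly.”, `filter_noise` returns three sentences. The repeated revenue sentence is dropped, and “By the way,” is stripped from the CEO sentence.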

Limitations of AI Summarization

While AI summarization tools are effective and useful, they have certain limitations that you should be aware of.

- Accuracy: A summary can only be as good as its input data. If the data fed to the system contains errors or inconsistencies, you can expect inaccurate summaries. These tools are also susceptible to ‘AI hallucination’, in which a generative model produces output that is nonsensical or unsupported by its input, such as facts or figures that do not appear in the source text.

- Bias: The output can also be biased depending on the data it’s trained on.

- Creativity: AI-generated summaries may not always have the creative touch that can be present in human-generated ones.

Accuracy can be improved and bias reduced by carefully preprocessing the datasets the AI is trained on, and by adopting a human-in-the-loop (HITL) approach for ongoing review and oversight.

Conclusion

Although AI lacks human-like intuition, it is skilled at deciding what is important. By combining frequency analysis, contextual understanding, and training based on human feedback, it produces summaries that are clear, concise, and useful.

So the next time you glance at an AI-generated summary, remember that a sophisticated decision-making process determined what to highlight and what to leave out.

To implement an AI summarization tool in your business, consult our experts at DeepKnit AI. They have extensive experience integrating different kinds of AI tools with legacy systems to give your business the best results.

Why spend time on exhaustive reports? Let AI summarize them for you.

Consult a DeepKnit AI expert to find the right AI summarization tool for you.
